Projects

Here are examples of some things that I’ve worked on, big and small!

🚗A self-driving car that drove us to the park

I founded and led an autonomous driving group called Nova! We built our own self-driving car (custom software and hardware setup). Here’s our car taking us on a picnic.

Johnny, an autonomous reforestation robot

🏆 Winner of the Farm Robotics Challenge's Excellence in Regenerative Agriculture award, 2025

I wrote the software for Johnny, the second reforestation robot developed in the Kantor Lab at CMU. Like Steward (see below), Johnny was deployed in the real world. This time it planted real seedlings with the help of a robot arm. This was CMU's submission to the international 2025 Farm Robotics Challenge, where it won the Excellence in Regenerative Agriculture award.

🦾Pick and place in a custom simulator

I modeled the joint dynamics of an xArm 6 in EcoSim, my custom Unity-based robotics simulator, and wrote a ROS2 package that calculates IK, plans trajectories, and sends joint commands to Unity via TCP.
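
To give a feel for the last step, here is a minimal sketch of how joint commands could be framed as binary messages before being written to a TCP socket. The wire format (a 4-byte joint count followed by little-endian 32-bit floats) and all function names are assumptions made for this illustration, not the format EcoSim actually uses.

```python
import struct


def encode_joint_command(angles_rad):
    """Pack joint angles as: uint32 count, then one float32 per joint (little-endian).

    This layout is a hypothetical example format.
    """
    return struct.pack(f"<I{len(angles_rad)}f", len(angles_rad), *angles_rad)


def decode_joint_command(payload):
    """Inverse of encode_joint_command; returns the joint angles as a list of floats."""
    (count,) = struct.unpack_from("<I", payload, 0)
    return list(struct.unpack_from(f"<{count}f", payload, 4))


def send_joint_command(sock, angles_rad):
    """Write one framed command to an already-connected socket.socket."""
    sock.sendall(encode_joint_command(angles_rad))
```

Prefixing the count lets the receiver know how many bytes to read from the stream, which matters because TCP does not preserve message boundaries.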

🌲A fully autonomous reforestation robot for the real world

November 2024

I managed a 5-person project to design and build a robot that plants trees in deforested areas. We used a Warthog UGV and a custom planting mechanism. I wrote the software, including a full ROS2 stack, a custom simulator, a sleek web interface, and assorted firmware.

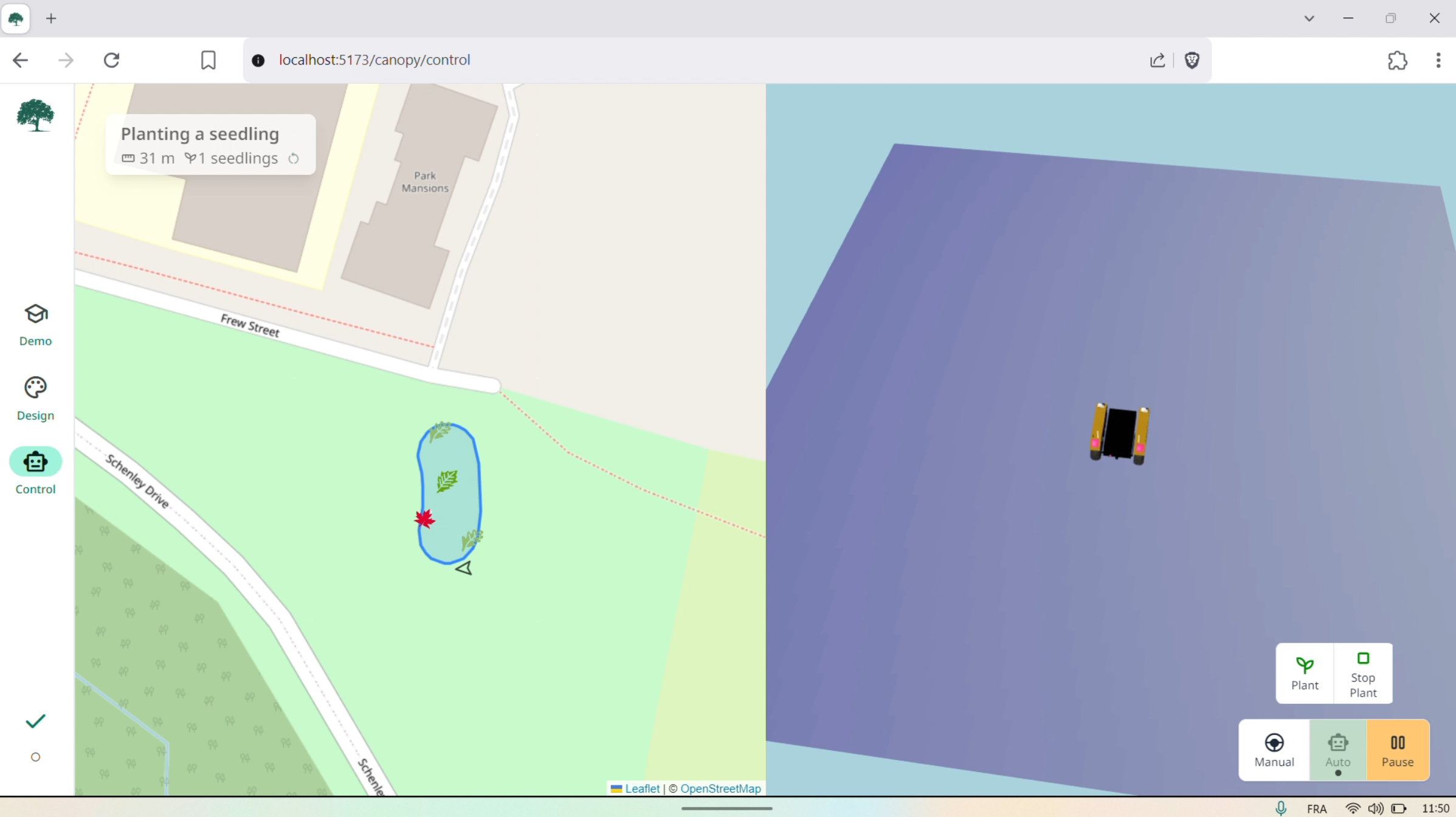

A forest design and robot control tool for the browser

Canopy can instantly generate forest plans using native species: simply paint where you want your trees to go. It also communicates with a reforestation robot running ROS2 over WebSockets. Written with three.js, Svelte, SvelteKit, TypeScript, and bits-ui. It includes an interactive demo that guides new users through the forest design process. Successfully tested in the real world, with real foresters who had zero robotics experience!

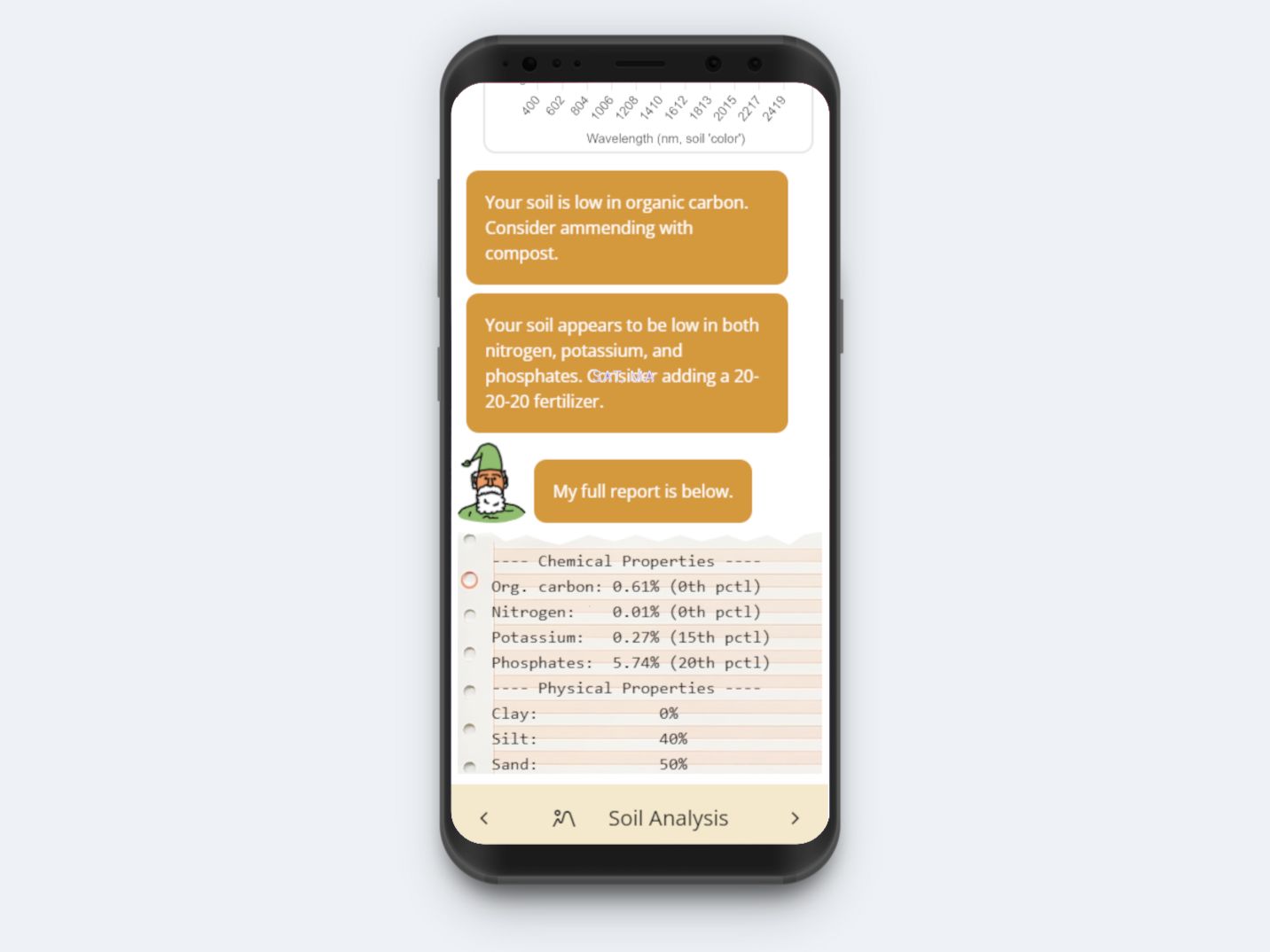

🌱Fast, accurate soil analysis using soil spectroscopy in the browser

As part of a research fellowship in the USDA's AI Center of Excellence, I developed a SOTA soil analysis tool. It uses a custom-trained ML model to predict physical soil properties from a single VisNIR scan of soil. Tests that take days in a wet lab are finished in less than a second.

🪴Plant ID in the browser using on-device AI

I wanted to push the limits of what on-device AI on a smartphone can do. This is a proof of concept of deploying a ResNet-based plant classifier to the browser using Microsoft's ONNX Runtime library. It's fast, accurate, and private.

🦾Inverse kinematics on a Panda arm

February 2024

With my friend Leo, I programmed inverse kinematics on a Panda manipulator using FrankaPy and MoveIt. The arm’s transform tree was calibrated using a camera mounted on the robot’s wrist and an ArUco marker on the table.
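
The calibration idea can be sketched as a chain of rigid transforms: once the camera reports the marker's pose, multiplying base-to-wrist, wrist-to-camera, and camera-to-marker gives the marker's pose in the robot's base frame. The snippet below is a simplified, pure-Python illustration with hypothetical names and made-up numbers; it is not the FrankaPy or MoveIt API.

```python
def matmul4(a, b):
    """Multiply two 4x4 homogeneous transforms given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert_rigid(t):
    """Invert a rigid transform [R | p] as [R^T | -R^T p]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    new_p = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [new_p[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def translation(x, y, z):
    """Pure-translation transform, used here only to build example inputs."""
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def marker_in_base(t_base_wrist, t_wrist_camera, t_camera_marker):
    """Chain the three transforms to express the marker pose in the base frame."""
    return matmul4(matmul4(t_base_wrist, t_wrist_camera), t_camera_marker)
```

If the marker's pose in the base frame is already known, the same chain can be rearranged with `invert_rigid` to solve for the unknown wrist-to-camera transform instead.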

🕹️Custom robotics simulator using Unity

[This simulator has changed a lot since this video.] The above is a tool that I’m developing for my reforestation robot project at CMU. The Unity-based simulator has a complete bridge with ROS2, so sensor data from the simulator can be easily displayed in RViz, for example. I wrote custom scripts to simulate camera sensors, GNSS, and more. The terrain is based on real-world elevation data pulled from the USGS’s National Map Downloader. I’ve designed this tool so that virtual worlds can be easily created based on any location in the world: all you need are the GPS coordinates.

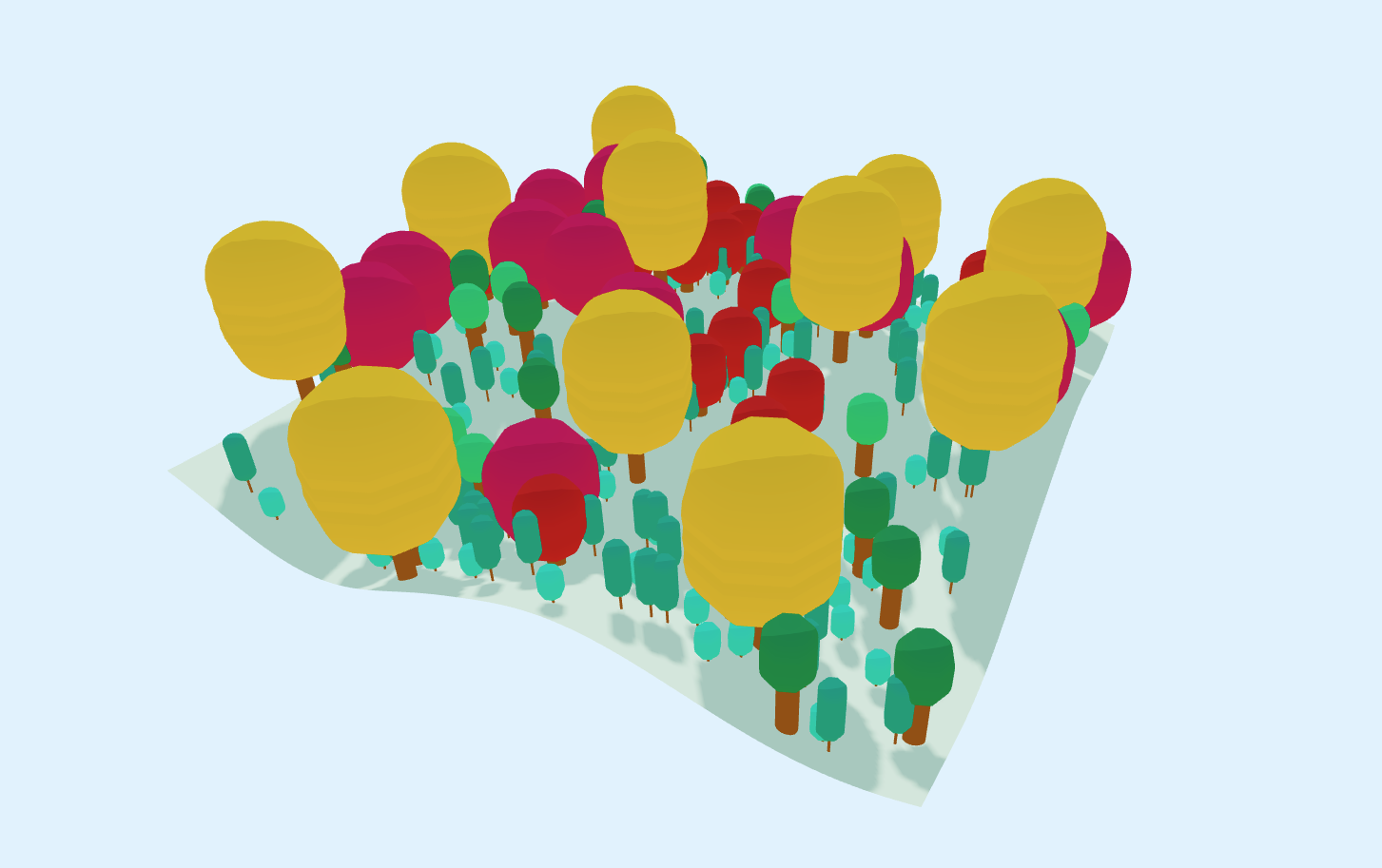
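
Building a world from GPS coordinates means mapping latitude and longitude onto a flat, metric grid at some point. Here is a minimal sketch of one common way to do that, an equirectangular approximation around a chosen origin. The function name and the choice of approximation are assumptions for illustration; the simulator itself may do this differently.

```python
import math

EARTH_RADIUS_M = 6378137.0  # WGS84 equatorial radius, in meters


def gps_to_local(lat_deg, lon_deg, origin_lat_deg, origin_lon_deg):
    """Return (east, north) in meters relative to the origin.

    Uses an equirectangular approximation, which is reasonable for
    small areas (a few kilometers) away from the poles.
    """
    d_lat = math.radians(lat_deg - origin_lat_deg)
    d_lon = math.radians(lon_deg - origin_lon_deg)
    north = EARTH_RADIUS_M * d_lat
    east = EARTH_RADIUS_M * d_lon * math.cos(math.radians(origin_lat_deg))
    return east, north
```

For example, a point 0.001 degrees north of the origin lands roughly 111 meters north of it in the local frame.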

🌳Forest generation in the browser

I’m fascinated with teaching computers to have “green thumbs”: modeling plant dynamics so that, eventually, machines can help us grow plants better than ever!

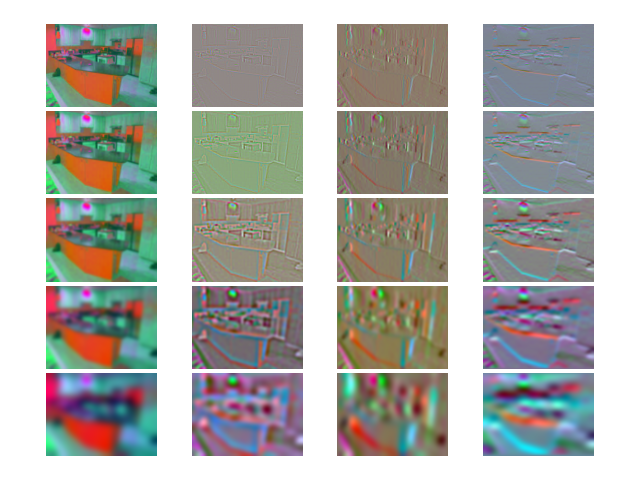

🖼️Scene classification using a retro bag-of-words technique

September 2023

Life existed before CNNs! For my CS class at CMU, I wrote a bag-of-words scene classifier. It uses a series of Gaussian and related filters to convert any image into the frequency domain, then uses the filter responses to construct a histogram. These histograms are clustered, forming a classifier. This technique used to be state-of-the-art. My classifier achieved a whopping 61% accuracy after I tuned the spatial pyramid params.
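
Here is a small sketch of the spatial pyramid part. It assumes each pixel has already been assigned a "visual word" index (for instance by clustering filter responses), and it weights all pyramid levels equally, which is a simplification. The function names are made up for this example and this is not my original coursework code.

```python
def word_histogram(word_map, num_words, r0, r1, c0, c1):
    """Count visual words inside the cell [r0, r1) x [c0, c1)."""
    hist = [0] * num_words
    for r in range(r0, r1):
        for c in range(c0, c1):
            hist[word_map[r][c]] += 1
    return hist


def spatial_pyramid(word_map, num_words, levels):
    """Concatenate per-cell histograms for 1x1, 2x2, 4x4, ... grids, then L1-normalize."""
    rows, cols = len(word_map), len(word_map[0])
    features = []
    for level in range(levels):
        cells = 2 ** level
        for i in range(cells):
            for j in range(cells):
                features += word_histogram(
                    word_map, num_words,
                    i * rows // cells, (i + 1) * rows // cells,
                    j * cols // cells, (j + 1) * cols // cells)
    total = sum(features)
    return [f / total for f in features] if total else features


def histogram_intersection(a, b):
    """Similarity between two normalized histograms; higher means more similar."""
    return sum(min(x, y) for x, y in zip(a, b))
```

A new image can then be labeled by finding the training image whose pyramid feature has the highest intersection score with it.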

📷Map-assisted state estimation with semantic segmentation

December 2022

The idea here is that if the GNSS signal is lost, or another localization sensor fails, we can use real-time perception data and offline map information to retain localization in an autonomous vehicle. Does it work as well as RTK? No! But it’s a great fallback, and it reuses computations that the AV stack is performing anyway, so it can run in the background as a safety system. And it reduced error from a simulated noisy GPS by 47%!
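
To make the fallback idea concrete, here is a toy sketch of one simple way to combine a noisy GNSS position with a map-based estimate: an inverse-variance weighted average that falls back to the map estimate when GNSS is unavailable. This is an illustrative assumption, not necessarily the method used in the project, and the function and parameter names are hypothetical.

```python
def fuse(gnss_pos, gnss_var, map_pos, map_var):
    """Inverse-variance weighted average of two 1D position estimates.

    Pass gnss_pos=None when the GNSS signal is lost; the map-based
    estimate is then returned unchanged.
    """
    if gnss_pos is None:
        return map_pos, map_var
    w_gnss = 1.0 / gnss_var
    w_map = 1.0 / map_var
    fused_var = 1.0 / (w_gnss + w_map)
    fused_pos = fused_var * (w_gnss * gnss_pos + w_map * map_pos)
    return fused_pos, fused_var
```

The fused variance is always smaller than either input variance, which is why blending in a second, independent estimate can cut error even when that estimate is less precise than RTK.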